Abstract

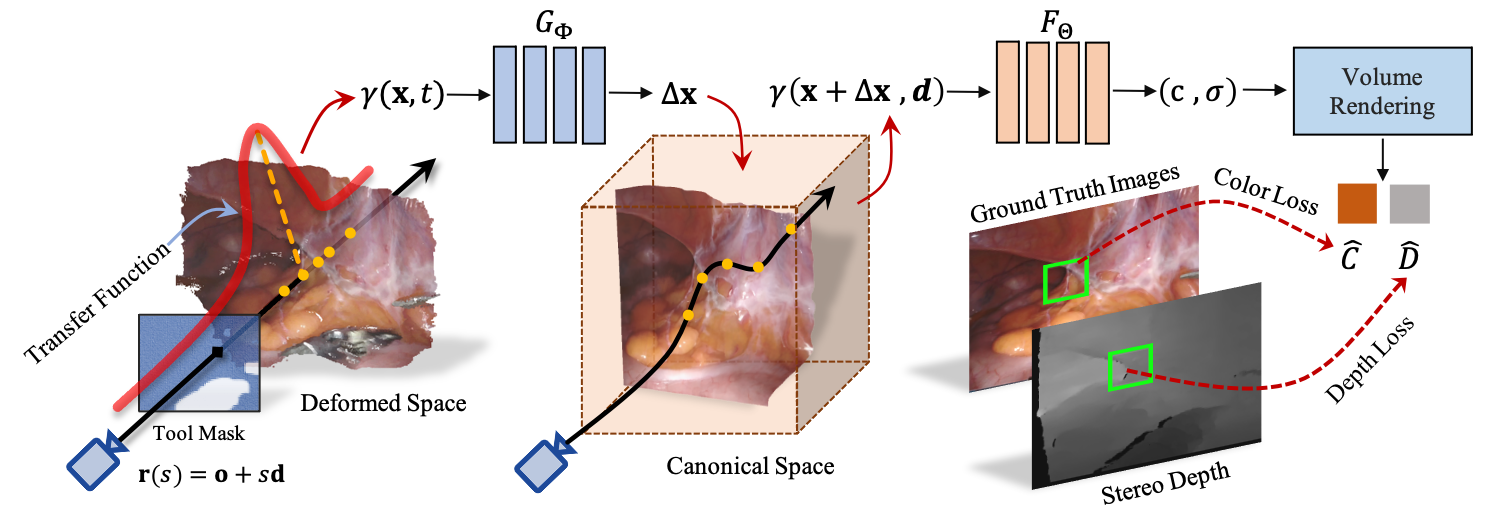

Reconstructing soft tissues from endoscopic stereo videos in robotic surgery is important for many applications, such as intra-operative navigation and image-guided automation of robotic surgery. Previous work on this task relies mainly on SLAM-based approaches, which struggle to handle complex surgical scenes. Inspired by recent progress in neural rendering, we present a novel framework for deformable tissue reconstruction from binocular captures in robotic surgery under the single-viewpoint setting. Our framework adopts dynamic neural radiance fields to represent deformable surgical scenes in MLPs, and it optimizes shapes and deformations in a learning-based manner. Soft tissue reconstruction faces two further challenges beyond non-rigid deformation: tool occlusion and the weak 3D cues available from a single viewpoint. To overcome these difficulties, we present a series of strategies: tool mask-guided ray casting, stereo depth-cueing ray marching, and stereo depth-supervised optimization.
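The following NumPy sketch is a rough illustration of how a dynamic radiance field can be queried and rendered. It assumes a D-NeRF-style design. A deformation MLP maps a point and a time stamp to a displacement into a canonical space. A canonical MLP then returns color and density at the displaced point, and the samples are alpha-composited along the ray. The layer sizes, the random weights, and the names `deform_net`, `canon_net`, `query` and `render_ray` are all hypothetical. Positional encoding, view dependence and training are omitted, so this is not the framework's actual architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Random weights for a small fully connected network (illustration only)."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(i), (i, o)), np.zeros(o))
            for i, o in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    """Forward pass with ReLU on hidden layers and a linear output layer."""
    for k, (w, b) in enumerate(params):
        x = x @ w + b
        if k < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x

# Hypothetical networks: (x, y, z, t) -> displacement, canonical point -> (r, g, b, sigma).
deform_net = init_mlp([4, 64, 64, 3])
canon_net = init_mlp([3, 64, 64, 4])

def query(points, t):
    """Warp points at time t into the canonical space, then read color and density there."""
    t_col = np.full((points.shape[0], 1), t)
    canonical = points + mlp(deform_net, np.concatenate([points, t_col], axis=1))
    out = mlp(canon_net, canonical)
    rgb = 1.0 / (1.0 + np.exp(-out[:, :3]))   # sigmoid keeps colors in [0, 1]
    sigma = np.maximum(out[:, 3], 0.0)        # density must be non-negative
    return rgb, sigma

def render_ray(origin, direction, t, near=0.0, far=1.0, n_samples=64):
    """Standard volume rendering: alpha-composite samples along one ray."""
    z = np.linspace(near, far, n_samples)
    pts = origin[None, :] + z[:, None] * direction[None, :]
    rgb, sigma = query(pts, t)
    delta = np.append(np.diff(z), 1e10)                               # last interval treated as infinite
    alpha = 1.0 - np.exp(-sigma * delta)                              # opacity of each segment
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))     # transmittance up to each sample
    weights = alpha * trans
    color = (weights[:, None] * rgb).sum(axis=0)
    depth = (weights * z).sum()                                       # expected termination depth
    return color, depth
```

Calling `render_ray(np.zeros(3), np.array([0.0, 0.0, 1.0]), t=0.5)` returns an RGB triple and an expected depth. During training, both MLPs would be optimized so that the rendered colors match the video frames.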

Method

Key challenges in 3D reconstruction of endoscopic surgery scenes: First, surgical scenes are deformable and undergo large topology changes, so dynamic reconstruction must capture a high degree of non-rigidity. Second, endoscopic videos offer only sparse viewpoints because the camera moves within a confined space, which limits the 3D cues available for soft tissues. Third, surgical instruments inevitably occlude parts of the soft tissues, which compromises the completeness of the reconstruction. To address these challenges, we adapt the emerging neural rendering framework to deformable surgical scene reconstruction.

Our main contributions: 1) To accommodate a wide range of geometries and deformations of soft tissues, we leverage neural implicit fields to represent dynamic surgical scenes. 2) To resolve the tool occlusion problem specific to surgical scenes, we design a new mask-guided ray casting strategy. 3) We incorporate depth-cueing ray marching and a depth-supervised optimization scheme, which use stereo priors to enable neural implicit field reconstruction from single-viewpoint input.
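The three strategies can be sketched in NumPy, but the sketch rests on several assumptions and does not reproduce the paper's exact definitions.

- **Mask-guided ray casting.** Pixels are sampled only outside the current tool mask. A hypothetical importance weight favors pixels that are occluded in many other frames.
- **Depth-cueing ray marching.** Sample depths are drawn from a Gaussian centred on each ray's stereo depth.
- **Depth supervision.** The loss is an L1 term over pixels with a valid stereo depth, where 0 is assumed to mark missing depth.

The function names, the importance formula and the Gaussian width are all illustrative choices.

```python
import numpy as np

def importance_map(tool_masks):
    """tool_masks: (T, H, W) bool, True where a tool covers the pixel.
    Assumed heuristic: weight = 1 + occlusion frequency normalized to [0, 1]."""
    freq = tool_masks.mean(axis=0)                 # fraction of frames each pixel is occluded
    return 1.0 + freq / (freq.max() + 1e-8)

def sample_rays(tool_mask, importance, n_rays, rng):
    """Draw distinct pixel indices for one frame, never casting rays through tool pixels."""
    weights = importance * (~tool_mask)            # zero probability on tool pixels
    p = weights.ravel() / weights.sum()
    flat = rng.choice(p.size, size=n_rays, replace=False, p=p)
    return np.unravel_index(flat, tool_mask.shape)  # (rows, cols)

def depth_cued_samples(stereo_depth, n_samples, sigma, near, far, rng):
    """Concentrate sample depths around each ray's stereo depth estimate."""
    z = stereo_depth[:, None] + sigma * rng.standard_normal((stereo_depth.size, n_samples))
    return np.sort(np.clip(z, near, far), axis=1)   # ray marching expects increasing depths

def depth_loss(rendered_depth, stereo_depth):
    """L1 depth supervision on pixels where stereo depth is available (0 = missing, assumed)."""
    valid = stereo_depth > 0
    if not valid.any():
        return 0.0
    return float(np.abs(rendered_depth[valid] - stereo_depth[valid]).mean())
```

The sampler never selects tool pixels in the current frame. Tissue that is hidden in other frames is sampled more often, presumably so that rarely visible regions still receive supervision. Placing samples near the stereo depth spends the per-ray sample budget where the surface is likely to be.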

Results and Comparisons

We test our method on typical robotic surgery stereo videos from 6 cases of our in-house da Vinci robotic prostatectomy data. We compare against E-DSSR, the most recent state-of-the-art surgical scene reconstruction method, as a strong baseline. Qualitative and quantitative comparisons between our method and E-DSSR are shown below. Note that PSNR and SSIM measure photometric similarity (higher is better) between projections of the reconstructed point clouds and the ground-truth images.
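The evaluation protocol can be illustrated with a small sketch. It assumes a pinhole camera with intrinsics `K` and points that are already expressed in the camera frame. Each colored point is splatted to its nearest pixel using a z-buffer. PSNR is then computed against the ground-truth image, optionally restricted to the pixels that received a point. The helper names `project_points` and `psnr` and the masking choice are assumptions. SSIM is omitted because it needs a windowed implementation (for example, `skimage.metrics.structural_similarity`).

```python
import numpy as np

def project_points(points, colors, K, height, width):
    """Splat camera-frame points into an image; the nearest point wins each pixel."""
    front = points[:, 2] > 0                       # discard points behind the camera
    points, colors = points[front], colors[front]
    uvw = points @ K.T                             # pinhole projection (homogeneous pixel coords)
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    z = points[:, 2]
    ok = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    image = np.zeros((height, width, 3))
    zbuf = np.full((height, width), np.inf)
    for ui, vi, zi, ci in zip(u[ok], v[ok], z[ok], colors[ok]):
        if zi < zbuf[vi, ui]:                      # z-buffer test
            zbuf[vi, ui] = zi
            image[vi, ui] = ci
    return image, np.isfinite(zbuf)                # image and mask of covered pixels

def psnr(pred, target, mask=None, max_val=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the target."""
    sq_err = (pred - target) ** 2
    if mask is not None:
        sq_err = sq_err[mask]                      # keep only covered pixels
    mse = sq_err.mean()
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_val ** 2 / mse))
```

For example, a uniform error of 0.1 on images in the range [0, 1] gives an MSE of 0.01, and therefore a PSNR of 20 dB.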

BibTeX

If you find this work helpful, you can cite our paper as follows:

@inproceedings{wang2022neural,
  title={Neural Rendering for Stereo 3D Reconstruction of Deformable Tissues in Robotic Surgery},
  author={Wang, Yuehao and Long, Yonghao and Fan, Siu Hin and Dou, Qi},
  booktitle={International Conference on Medical Image Computing and Computer-Assisted Intervention},
  pages={431--441},
  year={2022},
  organization={Springer}
}