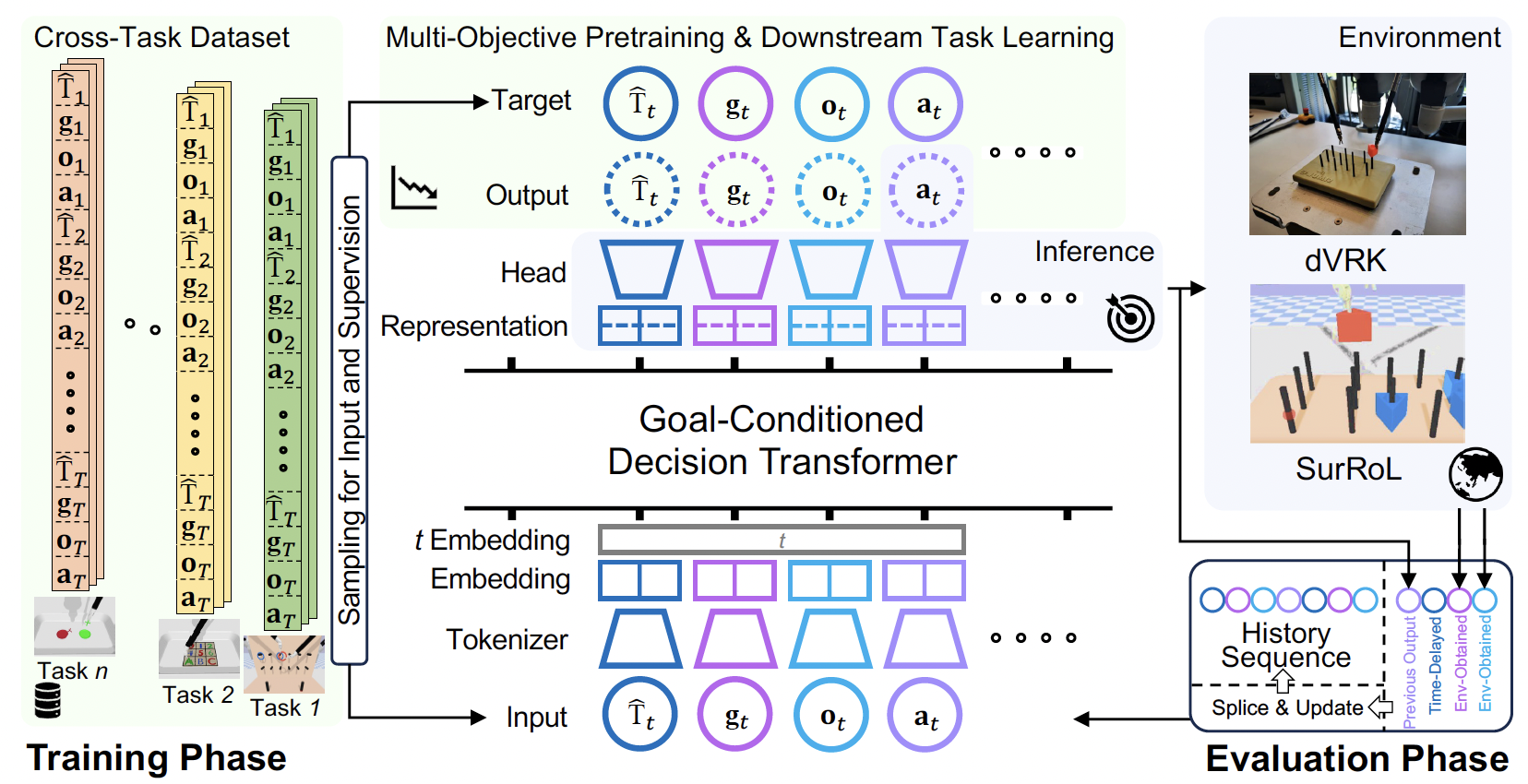

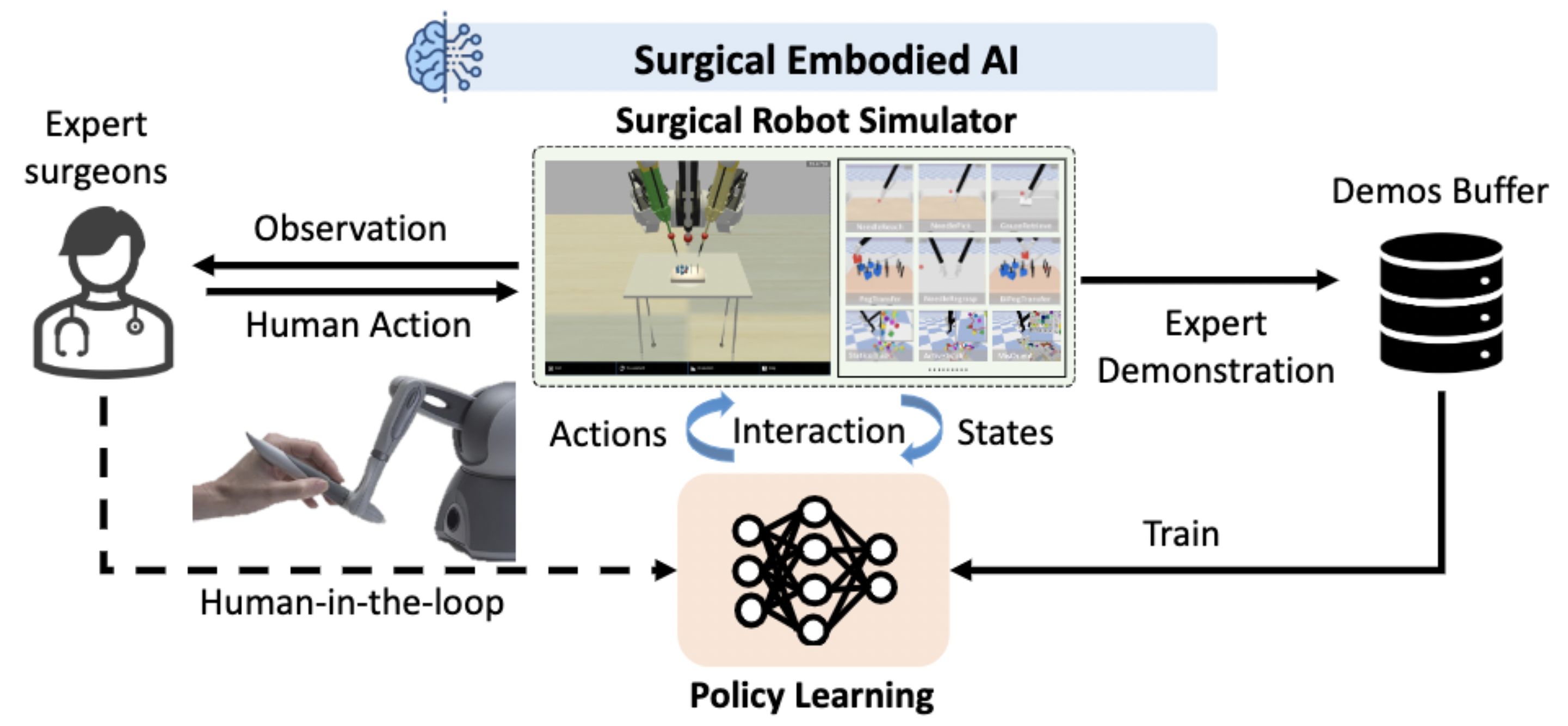

Surgical robot automation has attracted increasing research interest over the past decade, given its huge potential to benefit surgeons, nurses, and patients. Recently, the learning paradigm of embodied AI has demonstrated a promising ability to learn good control policies for various complex tasks, and embodied AI simulators play an essential role in facilitating this research. Despite visible progress on embodied intelligence in general, surgical embodied intelligence, which requires well-developed, domain-specific simulation environments, remains largely unexplored. To address this gap, we designed SurRoL, a simulation platform dedicated to surgical embodied intelligence, with a strong focus on learning-algorithm support and an extendable infrastructure design. With it, we hope to pave the way for future research on surgical embodied intelligence.

(Included in the dVRK Software Ecosystem as a community open-source ML tool.)

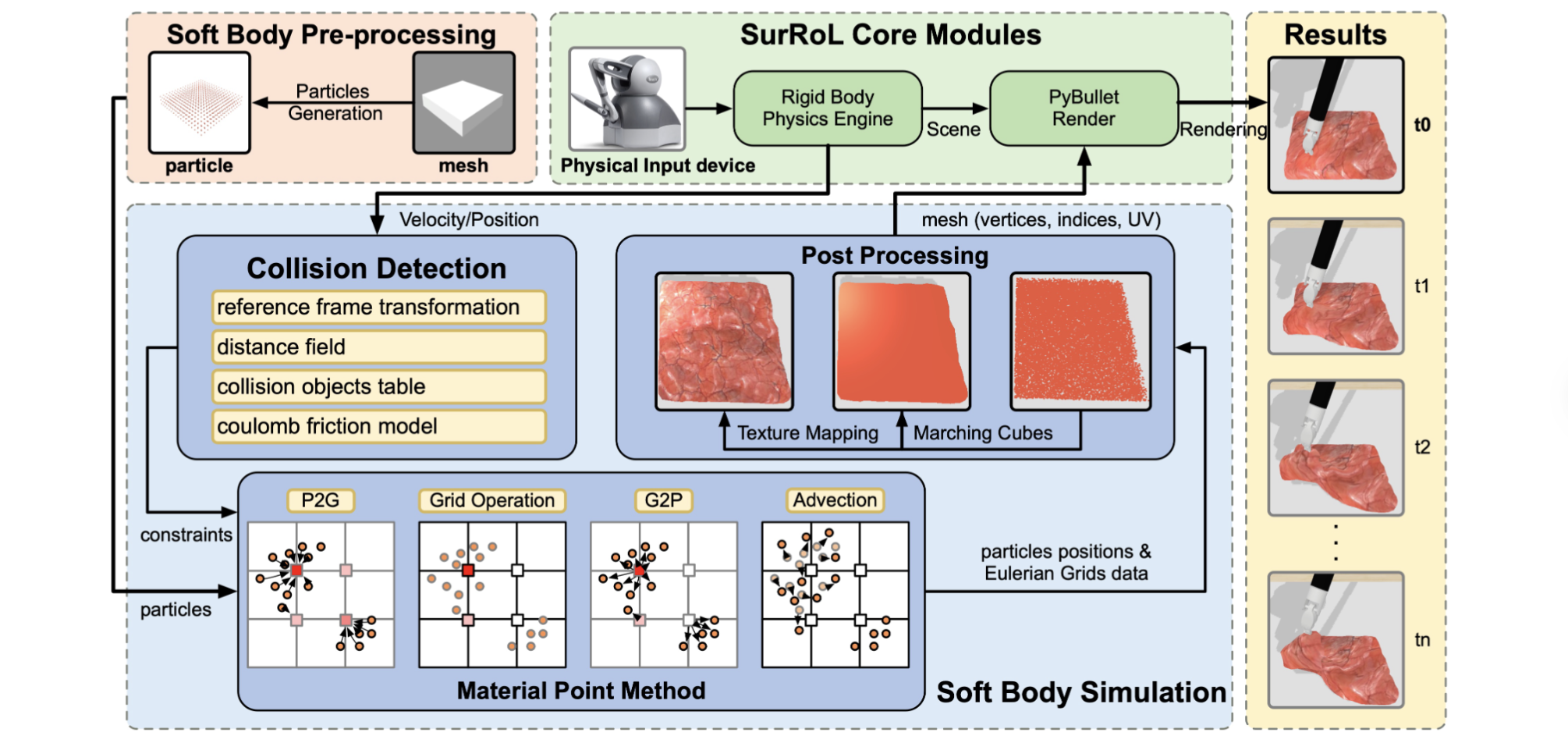

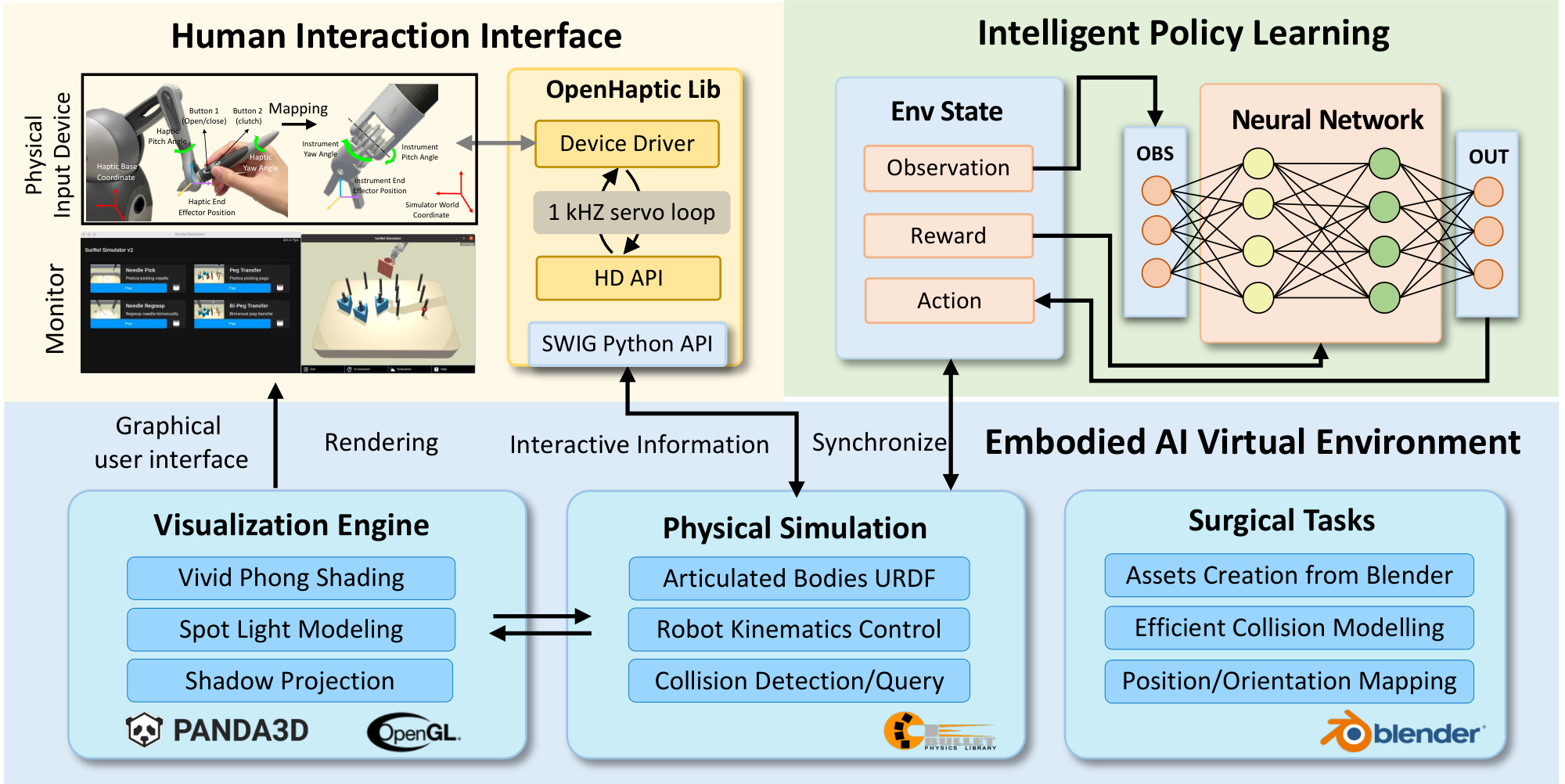

In SurRoL, we create assets of surgical tasks with the help of the powerful 3D modeling tool Blender, and at the same time generate the corresponding collision models and URDF description models for physical modeling in the simulation environment. Once a surgical task is imported into the simulator, a human operator can perform surgical actions with a human interaction device, and the interaction information is streamed to the virtual environment for physical simulation. Meanwhile, the simulator's visualization engine renders video frames, which are displayed on a monitor for human perception and interaction. The human actions can be recorded for policy learning, and the learned policy can in turn interact with the virtual environment. Together, these components form a human-in-the-loop surgical robot learning pipeline built around an interactive simulation environment.
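To make the human-in-the-loop data flow concrete, here is a minimal sketch in Python, assuming a toy environment whose observation is just a 3D tool-tip position. `ToySimEnv`, `record_episode`, and `Transition` are hypothetical names for illustration, not part of SurRoL's API: device input (or a policy) produces an action, the simulator advances, and each step is logged for later learning.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Observation = Tuple[float, ...]
Action = Tuple[float, ...]


@dataclass
class Transition:
    """One recorded step: what the operator saw, what they did, what happened next."""
    obs: Observation
    action: Action
    next_obs: Observation


@dataclass
class ToySimEnv:
    """Hypothetical stand-in for a simulated task: a tool tip moving in 3D."""
    pos: List[float] = field(default_factory=lambda: [0.1, 0.1, 0.1])

    def observe(self) -> Observation:
        # A real simulator would render frames; here the observation is the tip position.
        return tuple(self.pos)

    def step(self, action: Action) -> Observation:
        # Physics is reduced to integrating a commanded displacement.
        self.pos = [p + a for p, a in zip(self.pos, action)]
        return self.observe()


def record_episode(env: ToySimEnv,
                   controller: Callable[[Observation], Action],
                   steps: int) -> List[Transition]:
    """Stream controller commands into the simulator and log every transition."""
    demo: List[Transition] = []
    obs = env.observe()
    for _ in range(steps):
        action = controller(obs)      # human device input or a learned policy
        next_obs = env.step(action)   # simulator advances the scene
        demo.append(Transition(obs, action, next_obs))
        obs = next_obs
    return demo


# Usage: a scripted "operator" that moves the tip halfway toward the origin each step.
def scripted_operator(obs: Observation) -> Action:
    return tuple(-0.5 * x for x in obs)


demo = record_episode(ToySimEnv(), scripted_operator, steps=3)
print(len(demo), demo[-1].next_obs)
```

Because the controller is just a callable, the same loop covers both directions described above: a device reader records demonstrations, and a learned policy can be dropped in to interact with the environment.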

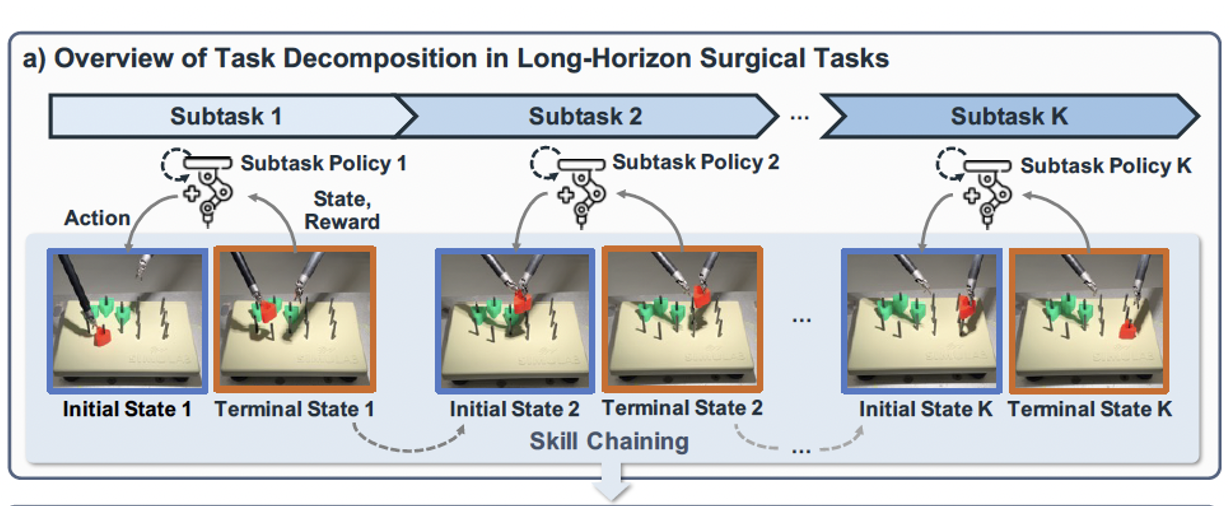

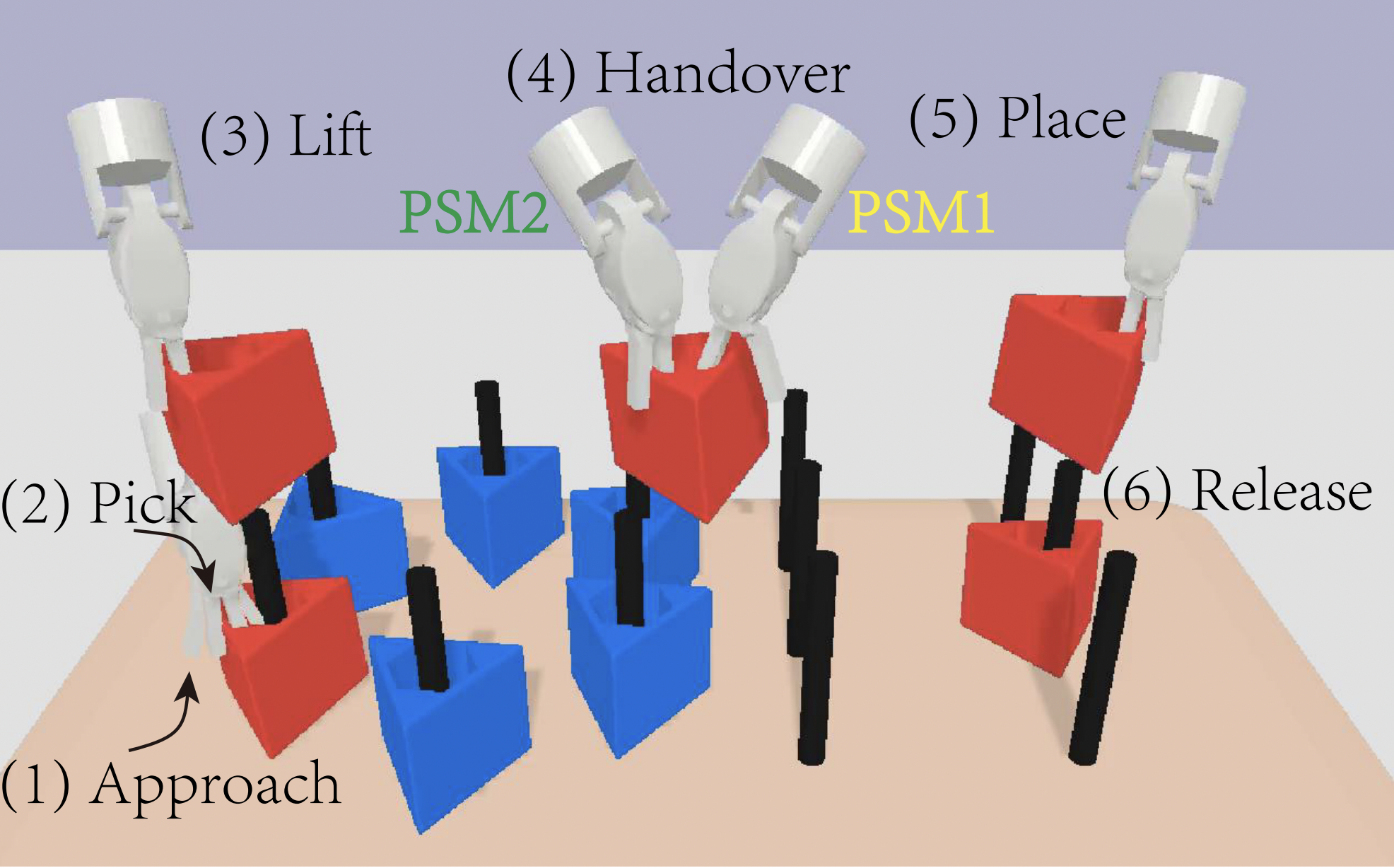

We have established a spectrum of tasks that reflect the dexterity and precision required in the surgical context.

In detail, SurRoL provides Fundamental Action Tasks: Needle Reach, Gauze Retrieve, Needle Pick, and Needle Regrasp; ECM FoV Control Tasks: ECM Reach, MisOrient, Static Track, and Active Track; and Basic Surgical Robot Skill Training Tasks: Peg Transfer, Bimanual Peg Transfer, Needle the Rings, Pick and Place, Peg Board, and Match Board. These 14 tasks range from entry-level to sophisticated, cover multiple levels of surgical skill, and involve manipulating the PSM(s) and the ECM.
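The task catalogue can be summarized as a small registry. This is an illustrative sketch only: the task names come from the list above, but the dictionary layout and the category keys are assumptions, not identifiers from the SurRoL code base.

```python
from typing import Dict, List

# Task names as listed above; the category keys are illustrative labels,
# not identifiers taken from the SurRoL code base.
TASKS: Dict[str, List[str]] = {
    "Fundamental Action": [
        "Needle Reach", "Gauze Retrieve", "Needle Pick", "Needle Regrasp",
    ],
    "ECM FoV Control": [
        "ECM Reach", "MisOrient", "Static Track", "Active Track",
    ],
    "Basic Surgical Robot Skill Training": [
        "Peg Transfer", "Bimanual Peg Transfer", "Needle the Rings",
        "Pick and Place", "Peg Board", "Match Board",
    ],
}


def count_tasks(registry: Dict[str, List[str]]) -> int:
    """Total number of tasks across all categories."""
    return sum(len(names) for names in registry.values())


def category_of(task: str, registry: Dict[str, List[str]] = TASKS) -> str:
    """Look up which category a task belongs to; raise KeyError if unknown."""
    for category, names in registry.items():
        if task in names:
            return category
    raise KeyError(task)


print(count_tasks(TASKS), category_of("Active Track"))
```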

To incorporate human control of surgical robots into the simulator, we developed a manipulation system with human input devices. We use Touch haptic devices (3D Systems Inc.) as the robot's two master arms to teleoperate the Patient Side Manipulators (PSMs) and the Endoscopic Camera Manipulator (ECM).
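One common way to map a master device onto a manipulator is incremental position control with motion scaling and a clutch. The text does not specify SurRoL's mapping, so the sketch below is an assumption: `IncrementalTeleop`, the scaling factor, and the millimetre units are hypothetical, and orientation is ignored.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, ...]


@dataclass
class IncrementalTeleop:
    """Hypothetical master-to-slave position mapping with motion scaling and a clutch.

    Positions are in millimetres. While the clutch is pressed, the master device
    can be repositioned without moving the slave arm.
    """
    scale: float = 0.2                       # assumed master-to-slave scaling factor
    last_master: Vec3 = (0.0, 0.0, 0.0)      # previous master sample
    slave_target: Vec3 = (0.0, 0.0, 0.0)     # commanded slave (PSM/ECM) position

    def update(self, master: Vec3, clutch_pressed: bool) -> Vec3:
        if not clutch_pressed:
            # Apply the scaled master displacement since the previous sample.
            delta = tuple(m - p for m, p in zip(master, self.last_master))
            self.slave_target = tuple(
                s + self.scale * d for s, d in zip(self.slave_target, delta)
            )
        # Track the master even while clutched, so re-engaging causes no jump.
        self.last_master = master
        return self.slave_target


teleop = IncrementalTeleop(scale=0.5)
teleop.update((2.0, 0.0, 0.0), clutch_pressed=False)   # slave moves 1 mm
teleop.update((10.0, 0.0, 0.0), clutch_pressed=True)   # reposition master only
final = teleop.update((12.0, 0.0, 0.0), clutch_pressed=False)
print(final)
```

Working with increments rather than absolute poses lets the operator clutch, recenter the device, and continue without the arm jumping. In practice `last_master` would be initialized from the first device reading rather than the origin.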

ECM Control

Bimanual PSMs Control

We also integrated SurRoL with the real-world dVRK, enabling human control of both the real-world PSMs and their counterparts in SurRoL at the same time.
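Simultaneous control of a simulated and a real arm can be organized as a simple fan-out: every operator command goes to each backend. The sketch below assumes a hypothetical `move_to` interface; `RecordingArm` only logs targets, and a real backend would wrap the dVRK software stack, which is not shown here.

```python
from typing import List, Protocol, Tuple

Pose = Tuple[float, ...]


class ArmBackend(Protocol):
    """Anything that accepts a Cartesian target (hypothetical interface)."""
    def move_to(self, target: Pose) -> None: ...


class RecordingArm:
    """Stand-in backend that just records targets; a real one would drive an arm."""
    def __init__(self, name: str) -> None:
        self.name = name
        self.history: List[Pose] = []

    def move_to(self, target: Pose) -> None:
        self.history.append(target)


class MirroredArm:
    """Forward every operator command to all backends, e.g. a simulated and a real PSM."""
    def __init__(self, *backends: ArmBackend) -> None:
        self.backends = backends

    def move_to(self, target: Pose) -> None:
        for backend in self.backends:
            backend.move_to(target)


sim_psm = RecordingArm("sim_psm1")
real_psm = RecordingArm("real_psm1")   # placeholder for a dVRK-backed driver
mirror = MirroredArm(sim_psm, real_psm)
for target in [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]:
    mirror.move_to(target)
print(sim_psm.history == real_psm.history)
```

Keeping the teleoperation code unaware of how many arms it drives makes it easy to add or remove the real robot. A physical backend would also need safety limits and latency handling that this sketch omits.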

Single-handed PSM Control

Bimanual PSMs Control

Below are the trajectories of four learned policies on the real dVRK, demonstrating that our platform enables smooth transfer of learned skills from simulation to the real world.